Abstract

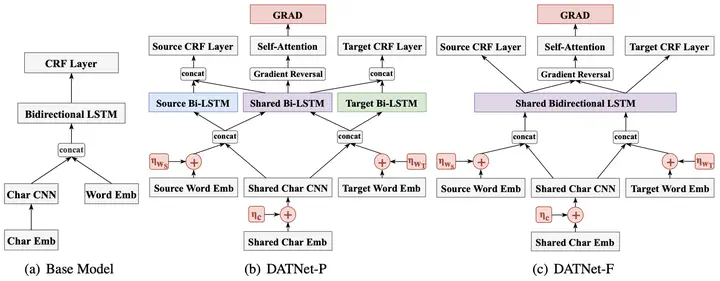

We propose a new architecture for sequence labeling, termed Dual Adversarial Transfer Network (DATNet). DATNet has two variants, DATNet-F and DATNet-P, which explore effective feature fusion between high-resource and low-resource data. To address noisy and imbalanced training data, we propose a novel Generalized Resource-Adversarial Discriminator (GRAD) and adopt adversarial training to boost model generalization. We investigate the effects of the different components of DATNet across domains and languages, and show that significant improvements can be obtained, especially for low-resource data. Without adding any hand-crafted features, we achieve state-of-the-art performance on CoNLL, Twitter, PTB-WSJ, OntoNotes, and Universal Dependencies on three popular sequence labeling tasks, i.e., named entity recognition (NER), part-of-speech (POS) tagging, and chunking.