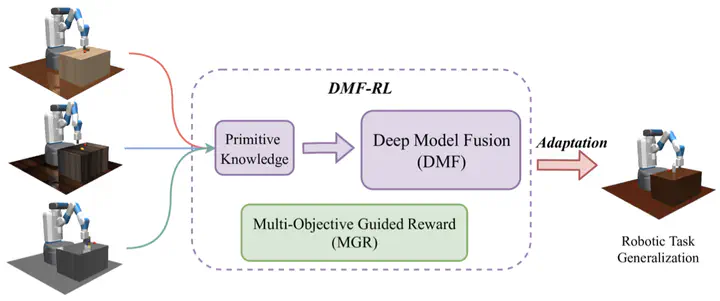

Efficient Robotic Task Generalization Using Deep Model Fusion Reinforcement Learning

Abstract

Learning-based methods have been used to program robotic tasks in recent years. However, extensive training is usually required, not only for initial task learning but also for generalizing the learned model to the same task in different environments. In this paper, we propose a novel Deep Reinforcement Learning algorithm for efficient task generalization and environment adaptation in robotic task learning. The proposed Deep Model Fusion (DMF) method reuses and combines the previously trained model to improve learning efficiency and results, so that a previously learned task can be generalized efficiently to new environments. In addition, we introduce a Multi-objective Guided Reward (MGR) shaping technique to further improve training efficiency. The proposed method was benchmarked against previous methods in various environments to validate its effectiveness.