DVD: Dynamic Contrastive Decoding for Knowledge Amplification in Multi-Document Question Answering

Abstract

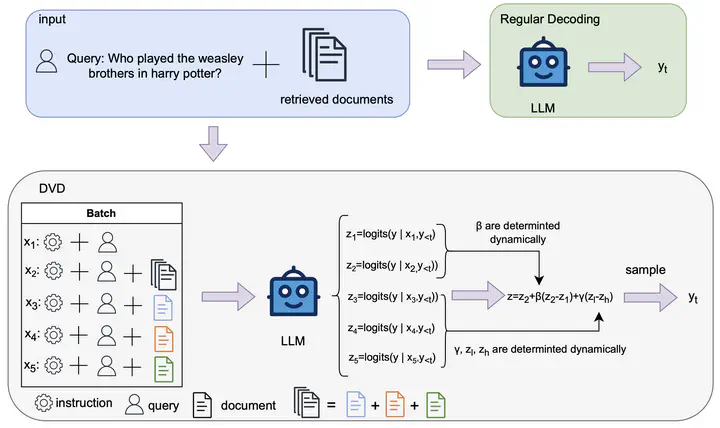

Large language models (LLMs) are widely used in question-answering (QA) systems but often generate hallucinated information. Retrieval-augmented generation (RAG) offers a potential remedy, yet uneven retrieval quality and irrelevant content may distract LLMs. In this work, we address these issues at the generation phase by treating RAG as a multi-document QA task. We propose a novel decoding strategy, Dynamic Contrastive Decoding (DVD), which dynamically amplifies knowledge from selected documents during generation. DVD constructs inputs batchwise, applies new selection criteria to identify documents worth amplifying, and uses contrastive decoding with a specialized weight calculation to adjust the final logits from which answer tokens are sampled. Zero-shot experiments on the ALCE-ASQA and NQ benchmarks show that our method outperforms other decoding strategies. Additional experiments validate the effectiveness of our selection criteria and weight calculation, as well as the method's applicability to general multi-document scenarios. Our method requires no training and can be combined with other methods to improve RAG performance. Our code is submitted with the paper and will be made publicly available.
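To make the three steps concrete, the following is a minimal sketch of a single decoding step, not the implementation evaluated in this paper. The abstract does not state the selection criteria or the weight formula, so both are stand-ins chosen for illustration: the document whose next-token distribution has the lowest entropy is selected, and the weight `alpha` is the entropy gap between the no-document distribution and the selected one. The function name `contrastive_step` and its arguments are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def contrastive_step(doc_logits, base_logits):
    """One illustrative decoding step.

    doc_logits:  one next-token logit vector per batch entry,
                 each entry being the question paired with one document.
    base_logits: next-token logits for the question with no document.
    Returns (selected document index, weight alpha, adjusted logits).
    """
    # ASSUMPTION: select the document with the most confident
    # (lowest-entropy) next-token distribution.
    entropies = [entropy(softmax(row)) for row in doc_logits]
    best = min(range(len(doc_logits)), key=entropies.__getitem__)

    # ASSUMPTION: the dynamic weight is the entropy gap between the
    # no-document distribution and the selected one, clipped at zero.
    alpha = max(0.0, entropy(softmax(base_logits)) - entropies[best])

    # Standard contrastive form: amplify the selected document's logits
    # and subtract the no-document logits.
    adjusted = [
        (1.0 + alpha) * d - alpha * b
        for d, b in zip(doc_logits[best], base_logits)
    ]
    return best, alpha, adjusted
```

In this sketch, when the selected document is no more confident than the no-document pass, `alpha` is zero and the step reduces to ordinary decoding on that document's logits.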